Platform for Audiovisual General-purpose ANnotation

Overview

PAGAN runs in any browser; the annotator application only requires a desktop computer with a conventional keyboard. The framework consists of an administrative dashboard for researchers (accessible under My Projects) and a separate participant interface, kept separate to minimise distraction during the annotation process.

Participant Interface & Annotator Application

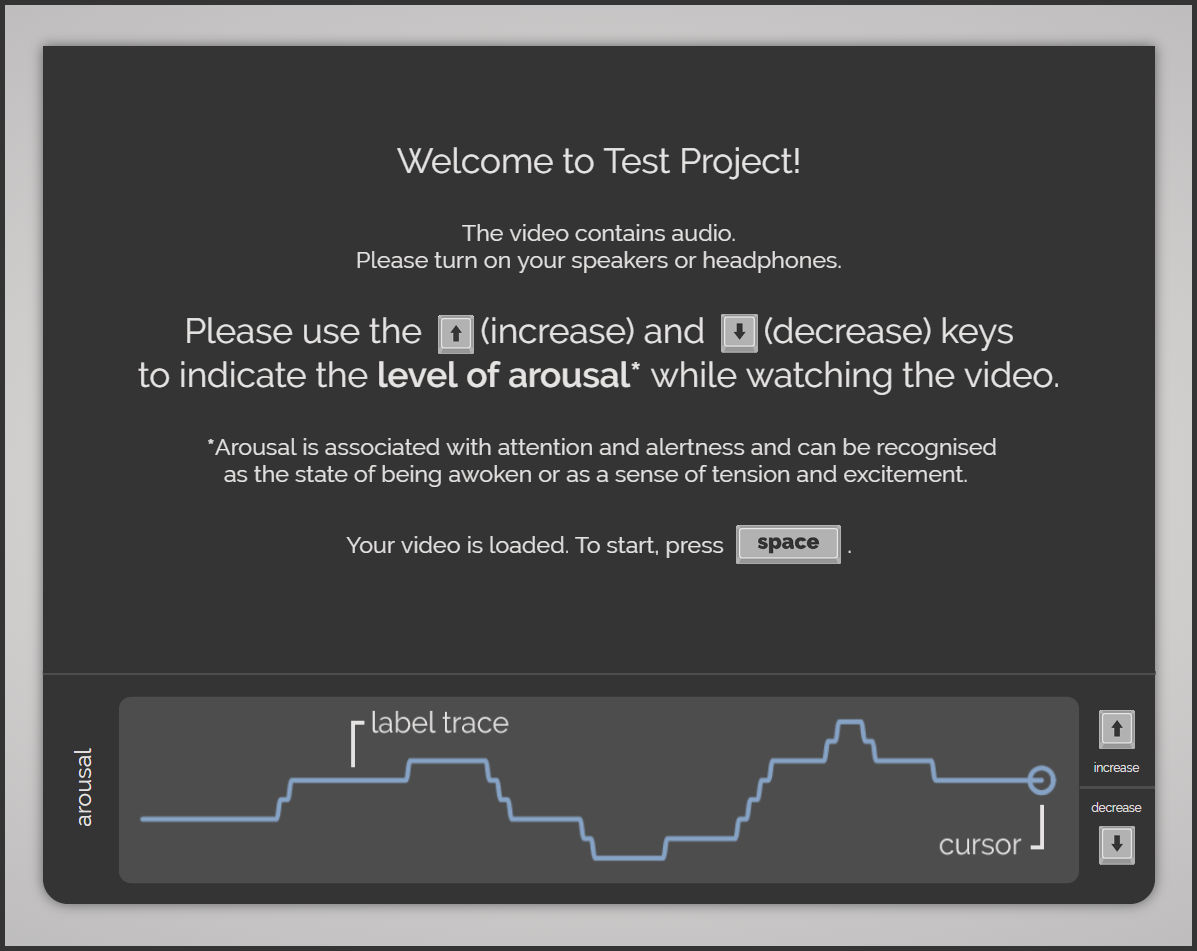

Greeting screen in the beginning of the annotation.


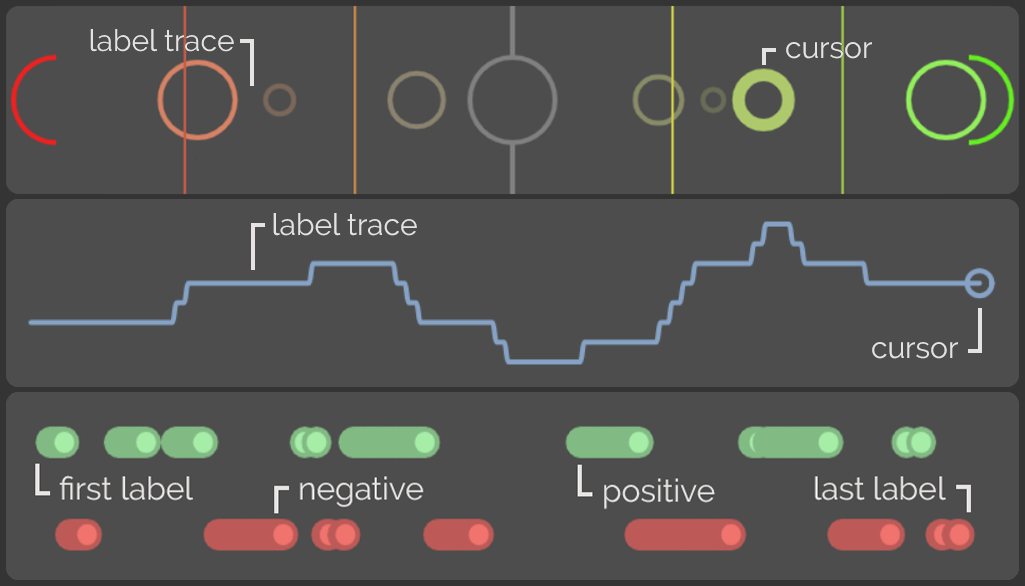

PAGAN supports three kinds of annotation frameworks: GTrace [1], based on a popular annotation protocol in affective computing; RankTrace [2], an intuitive solution for ordinal affect annotation; and BTrace [3], a simplified binary labelling tool based on AffectRank.

From top to bottom: GTrace, RankTrace, and BTrace interfaces.


Test Application

A test application can be found here: test it out

The test only needs a title, an annotation target, an annotation type (see image above), a YouTube source, and a setting for whether the videos are played with sound. After the annotation is done, the log file is automatically served and downloaded to the annotator's computer. The test application can be used freely in research projects where the researcher is present and sets up the system for the participant manually before annotation.
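The five inputs listed above can be pictured as a small record. The following is only an illustrative sketch, not PAGAN's actual data model or API: the names `AnnotationTestSetup`, `AnnotationType`, and `validation_errors`, the example target value, and the placeholder video link are all assumptions made for this example.

```python
from dataclasses import dataclass
from enum import Enum
from urllib.parse import urlparse


class AnnotationType(Enum):
    """The three annotation frameworks named on this page."""
    GTRACE = "GTrace"
    RANKTRACE = "RankTrace"
    BTRACE = "BTrace"


@dataclass(frozen=True)
class AnnotationTestSetup:
    """Hypothetical container for the inputs the test application asks for."""
    title: str
    target: str                    # what is being annotated, e.g. "arousal"
    annotation_type: AnnotationType
    youtube_source: str            # link to the YouTube video
    sound: bool                    # whether the video is played with sound


# Assumption: these host names are treated as valid YouTube links.
YOUTUBE_HOSTS = {"youtube.com", "www.youtube.com", "youtu.be"}


def validation_errors(setup: AnnotationTestSetup) -> list[str]:
    """Return a list of human-readable problems; an empty list means OK."""
    errors = []
    if not setup.title.strip():
        errors.append("title is empty")
    if not setup.target.strip():
        errors.append("annotation target is empty")
    if urlparse(setup.youtube_source).hostname not in YOUTUBE_HOSTS:
        errors.append("source is not a YouTube link")
    return errors


if __name__ == "__main__":
    setup = AnnotationTestSetup(
        title="Pilot session",
        target="arousal",
        annotation_type=AnnotationType.RANKTRACE,
        youtube_source="https://www.youtube.com/watch?v=VIDEO_ID",  # placeholder
        sound=True,
    )
    print(validation_errors(setup))
```

A researcher setting up the test manually for a participant could use a checklist like this to confirm that every field is filled in before the session starts.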

Administrative Dashboard & Project Creation

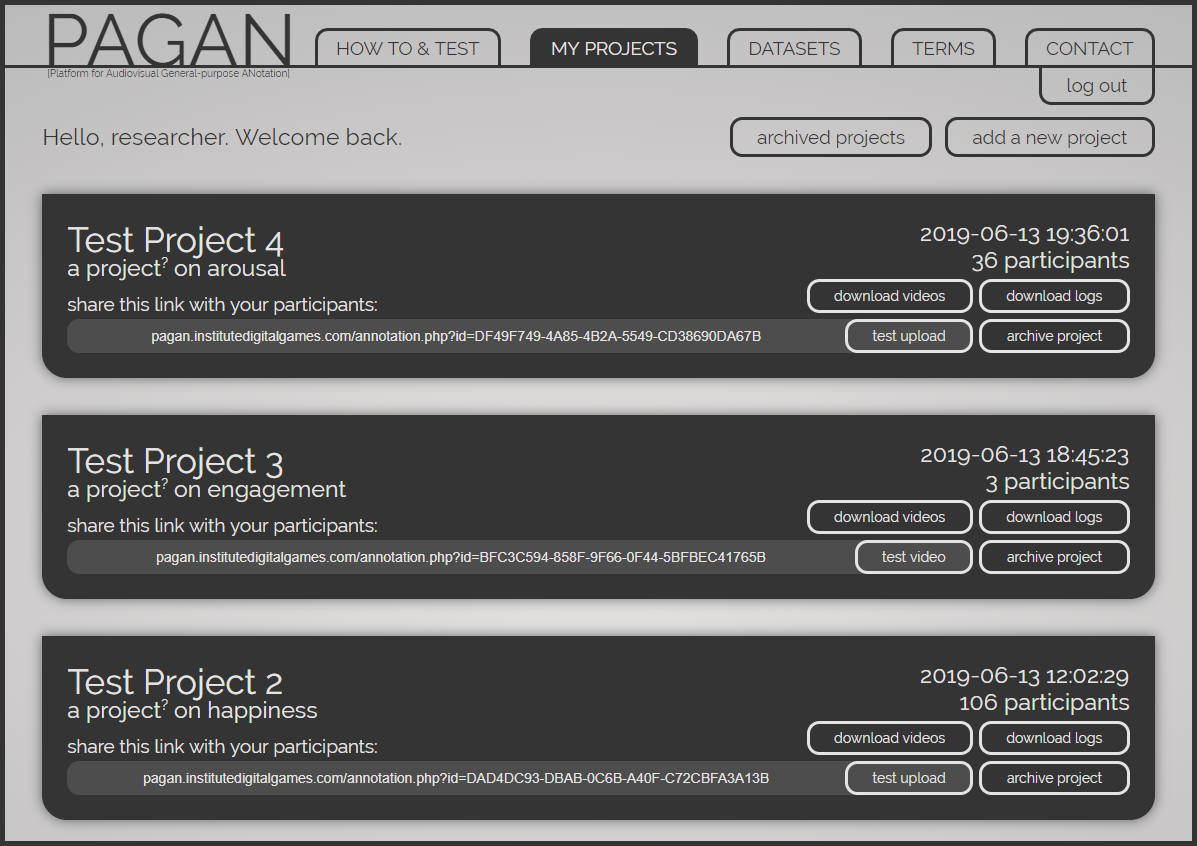

Advanced users can use the administrative dashboard to create new projects, manage existing ones, and access the collected annotation logs. Registered researchers have the option to upload videos instead of using YouTube links and can task their participants with uploading or linking to videos. This way PAGAN can accommodate different research protocols and setups.

Administrative dashboard, projects overview.


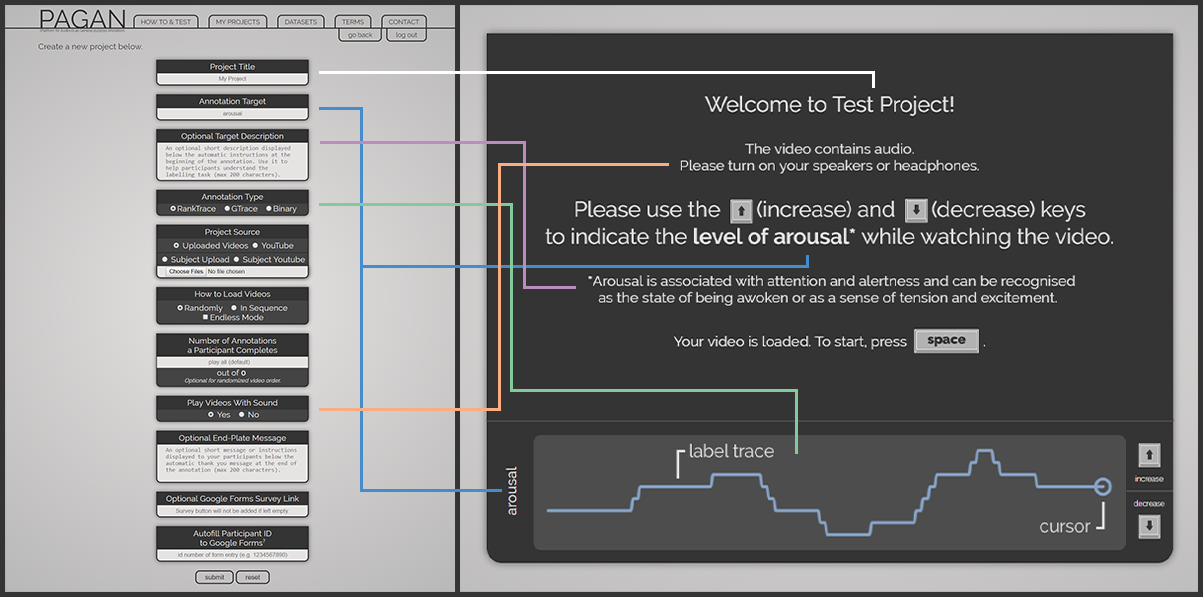

PAGAN is highly customizable, with many different options regarding the setup and information shared with the participants.

Project creation and corresponding annotator interface when uploading videos.


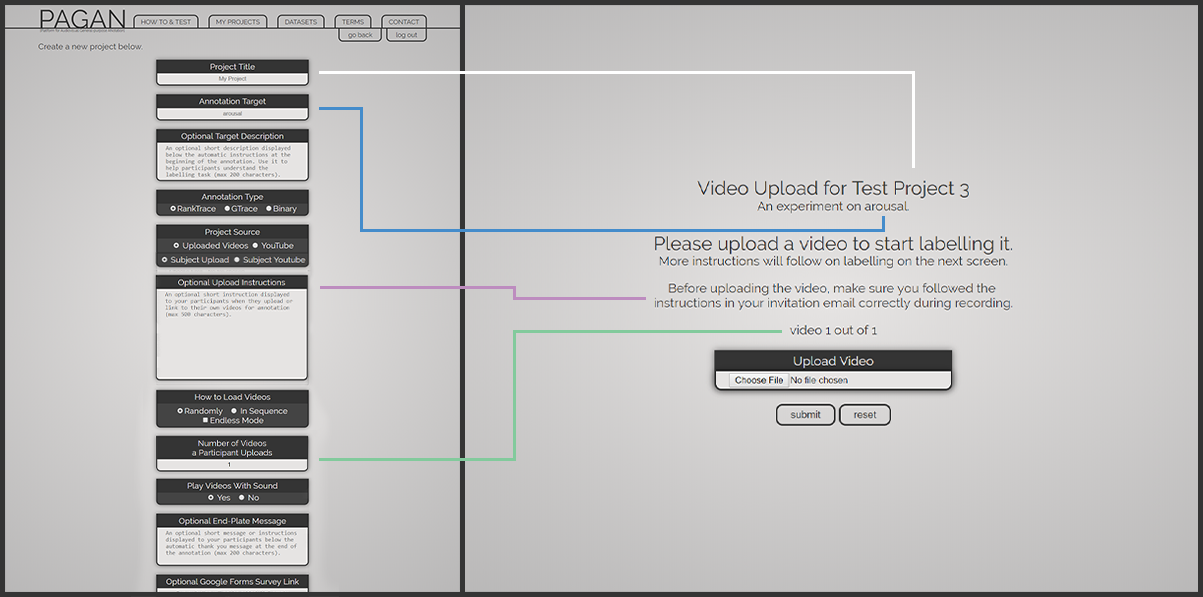

Project creation and corresponding annotator interface when tasking the participants to upload videos.


Finally, PAGAN also supports a light integration into Google Forms, which can help the platform fit more seamlessly into larger experimental designs.

1. R. Cowie, M. Sawey, C. Doherty, J. Jaimovich, C. Fyans, and P. Stapleton, “Gtrace: General trace program compatible with EmotionML,” in 2013 Humaine Association Conference on Affective Computing and Intelligent Interaction. IEEE, 2013, pp. 709–710.

2. P. Lopes, G. N. Yannakakis, and A. Liapis, “RankTrace: Relative and unbounded affect annotation,” in Proceedings of the International Conference on Affective Computing and Intelligent Interaction. IEEE, 2017, pp. 158–163.

3. G. N. Yannakakis and H. P. Martinez, “Grounding truth via ordinal annotation,” in Proceedings of the International Conference on Affective Computing and Intelligent Interaction. IEEE, 2015, pp. 574–580.